Is Generative AI Just a Shiny New Toy?

The technology underneath the hyped-up GenAI is not 'intelligent'. Now the hype bubble has burst, with meagre returns against billions of dollars in investment.

I spent more than a decade working in technology — as a product developer 💻 in my first avatar, before it was a thing, and then consulting for technology services firms. Over these years of scouring the web, I found little content on technology services. This is a trillion dollar 💲 industry that provides thousands of jobs and creates wealth for its talent.

But no one writes about this. Not consistently and clearly at least.

Welcome to TechScape.

I write about the latest and greatest 👑 in the world of technology services companies — digging into the opportunities ✅ and challenges ⛔ that tech services leaders are facing or likely to encounter.

I write based on my working experience ⚒ in this sector and a synthesis of the copious reading 📖 I do as part of my work.

Hi,

Today’s post is a detour. My posts are targeted at tech services firms and the events affecting their agenda: CIO spend trends, outsourcing trends, operating model changes, and the latest in offerings and GTM motions. But lately GenAI has become an impossible topic to ignore, even for tech services firms. Most of the discourse on GenAI refers to product companies, which are at the forefront of new applications and products, and not to the technology services firms that provide services on top of those applications. Despite that, tech services firms are in the middle of this hype curve, with Accenture alone committing $3 Bn of investment, which makes GenAI a topic of interest for tech services firms as well.

So far GenAI has struggled to generate returns, including for tech services firms. Usage is non-recurring, concerns about accuracy and security are serious, and experts are divided on the novelty of the technology. The lack of financial impact was reflected in equity markets as NASDAQ lost $1 Tn last month over AI jitters, slamming firms with large investments and little to no results to show for them.

❓ The question is — Is GenAI a breakthrough tech, suffering only from the aftermath of a typical mega hype or is the underlying technology really underwhelming?

Let’s get to it.

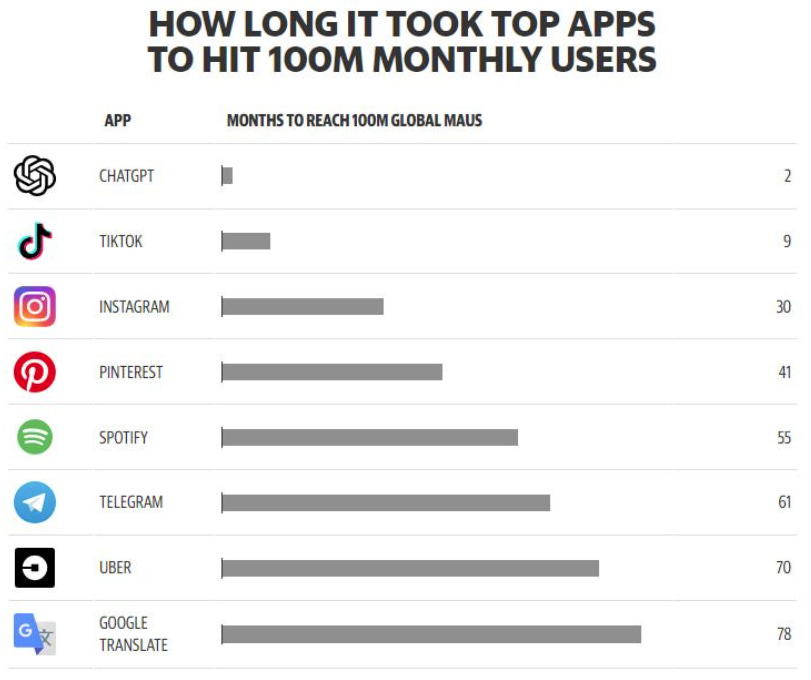

In late 2022, OpenAI, led by its high-profile co-founder and CEO Sam Altman, launched the most impactful technology of this decade: ChatGPT. ChatGPT is an AI chatbot built on OpenAI's LLMs (GPT-3.5 at launch, and later GPT-4). It was heralded as the next frontier tech, redefining AI standards by proving that machines can “learn” the complexities of human language. Unsurprisingly, when OpenAI released the demo on 30 Nov 2022, it went viral. It reached a staggering 100Mn users in 8 weeks, far faster than the previous record holder, TikTok, at 37 weeks.

For the first time, people could interact with AI systems that not only automate but create, something which only humans were capable of. It appeared that the bastion of human intelligence was broken, as AI could now reproduce what humans considered intelligent work and believed to be beyond the reach of machines: writing essays, coding programs, and generating art.

With this media hype, every company jumped on the bandwagon. Microsoft led the charge with GitHub Copilot, an absolute game changer. Google launched its own ChatGPT competitor, Bard, later renamed Gemini. Countless AI apps mushroomed, from web search (Perplexity) and writing (Rytr, Jasper) to image generation (Midjourney).

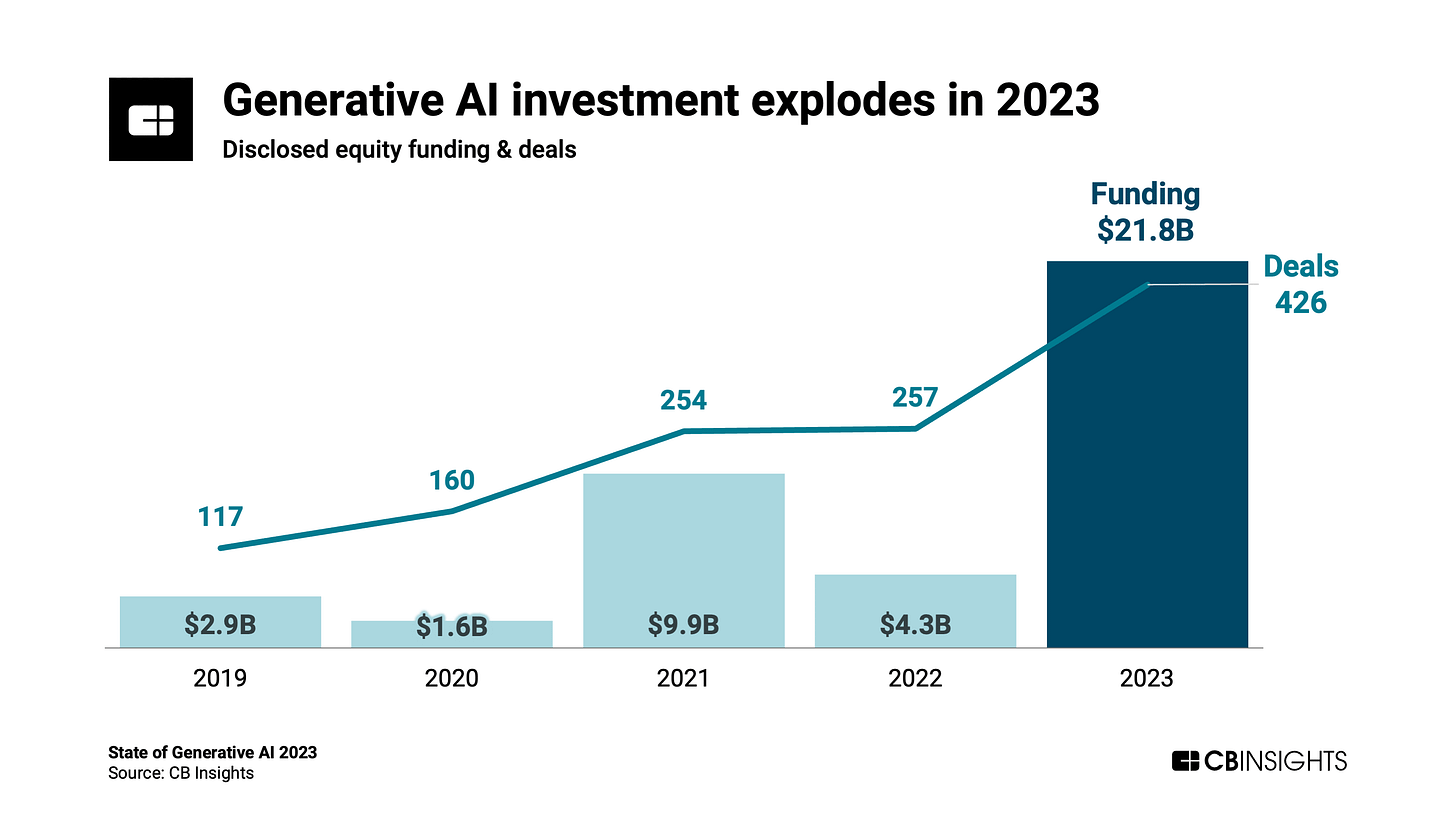

A combination of all of this resulted in $21 Bn of investment in 2023, a record by a handsome margin. For context, the investment in 2023 alone was more than the combined investments in the preceding five years. That was massive.

The Hard Landing

While 2023 saw the maniacal obsession with everything GenAI, come 2024 the pendulum swung.

The start of 2024 brought some hopes of a growth recovery as CIO surveys showed an uptick in spending in key areas. The macroeconomic doom and gloom started to abate as central banks in major economies, such as the ECB and the Bank of England, started to cut rates to spur growth (the Fed has not cut rates yet). Green shoots appeared as technology services firms, which were instrumental in taking GenAI to enterprise customers, started to show 5%-6% growth. As growth returned, investors and thought leaders began to question what GenAI had delivered after billions of dollars in investment.

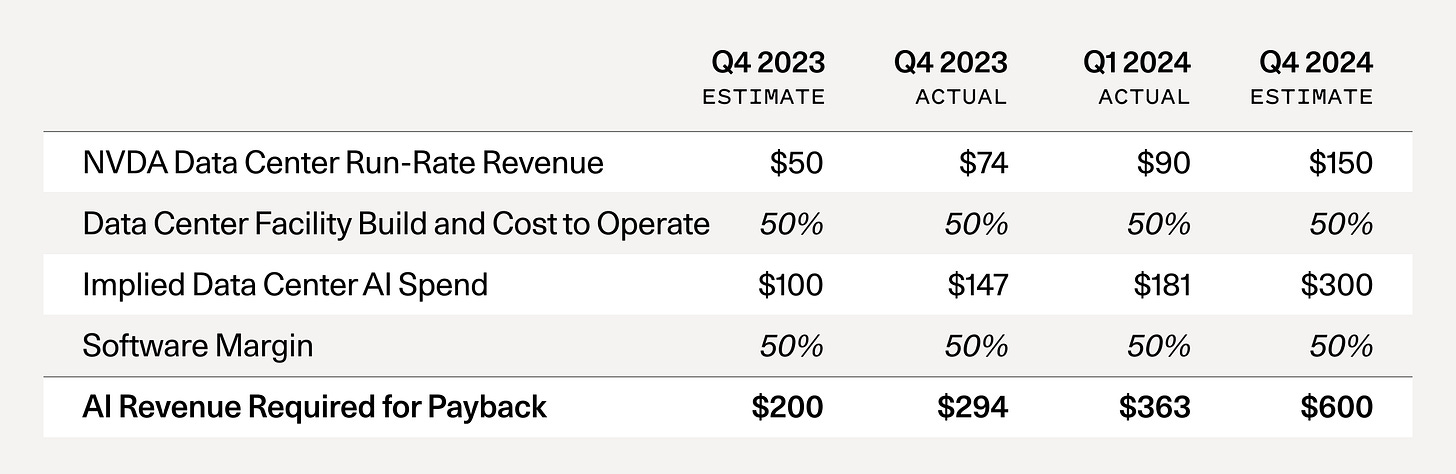

There were no answers. GenAI had not delivered the promised results. Most of the exercises were in the pilot stage, with limited production-grade implementations. Sequoia partner David Cahn had started to put pressure on AI back in Sep 2023, while the hype was still in full swing, when he published a scathing piece on the missing $200 Bn in AI impact. He followed it up with an even bolder piece arguing that the gap had grown to a whopping $600 Bn.

The floodgates opened soon after. Goldman Sachs, the marquee investment bank with $2.8 Tn of assets under supervision, said there was too much spend with too little benefit.

GS Head of Global Equity Research Jim Covello goes a step further, arguing that to earn an adequate return on the ~$1tn estimated cost of developing and running AI technology, it must be able to solve complex problems, which, he says, it isn’t built to do. He points out that truly life-changing inventions like the internet enabled low-cost solutions to disrupt high-cost solutions even in its infancy, unlike costly AI tech today.

It was increasingly clear that GenAI was not generating the expected returns, and that the GenAI hype road had come to an end.

Despite the consensus that GenAI was struggling to show value, the rationale varied widely — from expensive tech, lack of enterprise use cases, and security issues to the immaturity of the underlying technology.

Looking under the hood

As transformative as GenAI promised to be, very few understand what is under the hood. I am not promising a full look under the hood, as the latest LLMs are intricate and require dedicated time, directed effort and expertise to understand. For the interested, though, Will Douglas Heaven of MIT Technology Review wrote a full-length essay on AI.

But let’s look at the basics.

First, what is GPT? It stands for Generative Pre-trained Transformer.

GPTs are advanced neural networks used in natural language processing. Fundamentally, GPTs break language down into statistics to ‘understand’ it. At each step, a GPT picks a statistically probable next word, often the single most probable one (which is why GPT-generated text can read as monotonous).
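To make "most statistically probable word" concrete, here is a minimal Python sketch. The scores below are made-up numbers for illustration, not output from any real model, and the `softmax` helper is my own simplification of how a model turns raw scores into probabilities. Real GPTs score tens of thousands of candidate tokens and often sample rather than always taking the top one.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(scores.values())  # subtract the max for numerical stability
    exps = {word: math.exp(s - highest) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}

# Hypothetical scores for the word that follows "The cat sat on the"
scores = {"mat": 4.0, "sofa": 3.0, "moon": 0.5}

probs = softmax(scores)
next_word = max(probs, key=probs.get)  # greedy choice: the most probable word

for word, p in probs.items():
    print(f"{word}: {p:.2f}")
print("chosen:", next_word)  # chosen: mat
```

Always picking the top word is what makes the output predictable. Sampling from the probabilities instead adds variety, at the cost of occasionally choosing an odd word.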

I highlighted ‘Pre-trained’ because pre-training is critical. The LLM is pre-trained on a massive amount of text data — books, articles — to ‘learn’ the statistical patterns and structures of natural language. This allows the model to develop a general understanding of language.
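A toy version of "learning the statistical patterns" can be sketched in a few lines of Python. This is a simple word-pair counter (a bigram model), not a transformer, and the three-sentence corpus and the function names `pretrain` and `predict_next` are invented for illustration. The idea it shares with a real LLM is only this: read text, record which words tend to follow which, and use those statistics to predict.

```python
from collections import Counter, defaultdict

def pretrain(corpus):
    """Count how often each word follows each other word."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower seen in training, or None."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Tiny made-up corpus standing in for "books and articles"
corpus = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "the dog sat on the rug",
]

model = pretrain(corpus)
print(predict_next(model, "sat"))      # on
print(predict_next(model, "the"))      # cat
print(predict_next(model, "unicorn"))  # None (never seen in training)
```

Notice that the model "knows" nothing about cats or mats. It only knows what it has counted, which is the point the rest of this post keeps coming back to.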

The biggest issue was what everybody thought GenAI was. It was ‘marketed’ as a general-purpose intelligent machine that could perform complex tasks with basic guidance via text, almost like being given directions. After the initial fervor abated, people realized the true nature of the technology and its limitations.

GPT is not a general-purpose intelligent machine. It is a narrow machine, which operates well in a given job, with strictly defined rules and guardrails. Consequently, applications were limited to a few functions such as customer service with firms like Kore.ai, Observe.ai, Yellow.ai or in Sales & marketing automation with incumbents like Salesforce building GenAI via Einstein GPT.

But functions like customer service, sales & marketing and software programming have been the guinea pigs of automation since the earliest days of intelligent automation, starting with RPA and IDP, followed by ‘low code no code’ technologies. The incremental value of more automation in these functions, irrespective of how smart it is, is limited. Consequently, the impact of GenAI was nothing short of sobering.

But there is something deeper at play. There is a growing sentiment that the underlying technology is not smart. It is essentially a next-word predictor. GenAI does not understand the meaning of language. Instead, it converts the semantics and syntax of language into statistics, which lets it produce statements that appear intelligent. Are the new releases of GPT actually getting more intelligent, or just getting better at faking it?

GPT-1, introduced in 2018, was the first iteration of the GPT series and consisted of 117 million parameters. It showed unsupervised learning is possible, using books as training data to predict the next word in a sentence.

A year later, GPT-2 was released, with a significant upgrade to 1.5 billion parameters and a marked improvement in text generation capabilities.

The GPT-3 launch in 2020 was a pivotal moment, as the world started acknowledging this new frontier tech. With a mind-numbing 175 billion parameters, its advanced text-generation capabilities led to widespread use in drafting emails, writing articles and poetry, and generating code.

GPT-4, the latest iteration, continues this trend of rapid improvement, with a better ability to follow user intent and increased factual accuracy.
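To put the jump between generations in perspective, here is a quick back-of-the-envelope calculation in Python using only the parameter counts quoted above. GPT-4 is left out because no parameter count is given for it here. The variable names are mine, purely for illustration.

```python
# Parameter counts as quoted in this post
params = {
    "GPT-1": 117_000_000,
    "GPT-2": 1_500_000_000,
    "GPT-3": 175_000_000_000,
}

names = list(params)
growth = {}
for prev, curr in zip(names, names[1:]):
    # How many times larger is each model than its predecessor?
    growth[f"{prev} -> {curr}"] = params[curr] / params[prev]

for step, ratio in growth.items():
    print(f"{step}: about {ratio:.0f}x more parameters")
# GPT-1 -> GPT-2: about 13x more parameters
# GPT-2 -> GPT-3: about 117x more parameters
```

So each release was dramatically bigger than the last. Whether bigger means more intelligent is exactly the question.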

But we must dig deeper, and one of the best sources for this is Daniel Jeffries, who wrote a fascinating piece on why LLMs are smarter than us but dumber than a cat. It is a long essay but a great read (it takes a minimum of two sittings to assimilate the information!). A couple of excerpts felt relevant to me. The first is on GenAI being nothing close to a general-purpose machine, but designed to work like a charm on narrow tasks.

You can tell your human co-worker: "I need you to go off and put together a complete plan for outreach to our potential customers, craft a list of needed assets, setup an outreach sequence and come up with a novel way to make those interactions feel more natural rather than doing cold email outreach."

They'll figure how to do it and all the complex intermediate steps to take along the way, while asking questions or getting the help they need to get it done if they don't understand each step.

An agent will hallucinate five to twenty steps into such an open ended task and go completely off the rails.

It will never figure out the right assets or where to look. It won't figure out how to find and set up the right outreach software and craft the message based on theory of mind (aka understanding other people) and how they will respond. It will fail in countless ways big and small. It just can't do this yet. Not with today's technology and probably not with tomorrow's either.

Here is another one on the problem of absurd reasoning.

My team is building agents. And every team I've talked to that's building agents has pivoted from "we'll build a general purpose agent" to building a "narrow agent" where they've drastically pruned the scope of their ambitions and surrounded a Multimodal Large Language Model (MLLM) with expert heuristics and guardrails and smaller models to compensate for the absurd reasoning of LLMs.

What do I mean by absurd reasoning?

I mean these machines completely lack basic common sense.

If you were naked and I told you to run down to the store you'd have the common sense to know you should probably put some clothes on, even though I never explicitly told you that.

Final Word

Generative AI is far from mimicking human learning. To get there, it would arguably have to be sentient and attain consciousness. At this point, that seems unattainable. Why? Because we humans have not yet worked out what consciousness is, so there is no chance we can impart it to machines. In an even scarier version, we will not recognize if and when machines become sentient, because we don’t know what consciousness is.

It will be as much a race to build a general-purpose intelligent machine as a race to understand the depths and nuances of human intelligence and consciousness.